Who is Tim Rocktäschel?

Tim Rocktäschel got his start in NLP research, earning a PhD from University College London. His research initially focused on knowledge representation and textual entailment, until he became disillusioned with the static nature of the datasets and tired of “chasing scores on a leaderboard.” His move into reinforcement learning was inspired by the open-source work being done at DeepMind and OpenAI, and his postdoc at Oxford deepened his expertise in the field.

The ultimate objective of Tim’s research is to train reinforcement learning agents that generalize their behavior and adapt to novel situations. In the past, agents were trained in limited environments; Atari games, for example, are largely deterministic, unlike the real world.

“So really my work focuses on how we can move closer towards these real-world problems. How can we drop some of these simplifying assumptions that are baked into the environment simulators driving research?”

Training Reinforcement Learning Models in Game Environments

Historically, researchers exploited the simplifying assumptions built into static games to get deep learning models to train well. The idea was to remove these constraints gradually, moving the models step by step closer to the unconstrained, complex real world.

“We need to gradually remove [constraints] to be able to develop general methods so that we can learn something generally about training agents that can do things for real-world tasks.”

Tim emphasized the importance of recognizing the assumptions researchers build into the reinforcement learning environments they design. As in many other areas of neural network research, there’s a danger that models will latch onto features that merely correlate with the target variable instead of learning the variable itself. For example, a model trained to detect cancer in radiology scans might learn that a pen mark on the image correlates with a positive diagnosis, and classify those scans correctly without ever learning to recognize cancer in the scan itself. Reinforcement learning agents can similarly overfit to the specific environment they’re trained in, which makes their behavior hard to generalize.

One way to prevent overfitting is to use a procedurally generated environment, where every playthrough of a game is different. Minecraft is one example: each new world is generated procedurally, and some researchers are using it to train and test reinforcement learning agents.
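To make the idea of procedural generation concrete, here is a minimal sketch, assuming a toy grid world rather than Minecraft or NetHack. The `generate_level` function and its parameters are hypothetical. Each level is built from a seed, so training can draw on one pool of seeds while evaluation uses held-out seeds the agent has never seen, which tests generalization instead of memorization.

```python
import random
from typing import List


def generate_level(seed: int, size: int = 8, wall_prob: float = 0.25) -> List[str]:
    """Hypothetical generator: builds a size x size grid level from a seed.

    '#' is a wall, '.' is floor, 'S' is the start (top-left) and 'G' the goal
    (bottom-right). Levels are not guaranteed to be solvable.
    """
    rng = random.Random(seed)  # dedicated RNG: the same seed always gives the same level
    grid = [["#" if rng.random() < wall_prob else "." for _ in range(size)]
            for _ in range(size)]
    grid[0][0] = "S"
    grid[size - 1][size - 1] = "G"
    return ["".join(row) for row in grid]


# Train on one pool of seeds, then evaluate on held-out seeds the agent never saw.
train_levels = [generate_level(seed) for seed in range(100)]
test_levels = [generate_level(seed) for seed in range(1000, 1010)]

for row in test_levels[0]:
    print(row)
```

Keeping the train and test seed ranges disjoint is the design choice that matters: an agent that has only memorized specific layouts will do well on `train_levels` and poorly on `test_levels`.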

Another challenge in finding the right training environment is balancing richness against training speed. Games like Minecraft are rich in detail but, because of that richness, slow to train agents in; simpler games train quickly but offer far less richness. Tim and his team were on the hunt for a game that offered rich interactions yet was fast enough for agents to be trained on it quickly.

NetHack: The Reinforcement Learning Environment

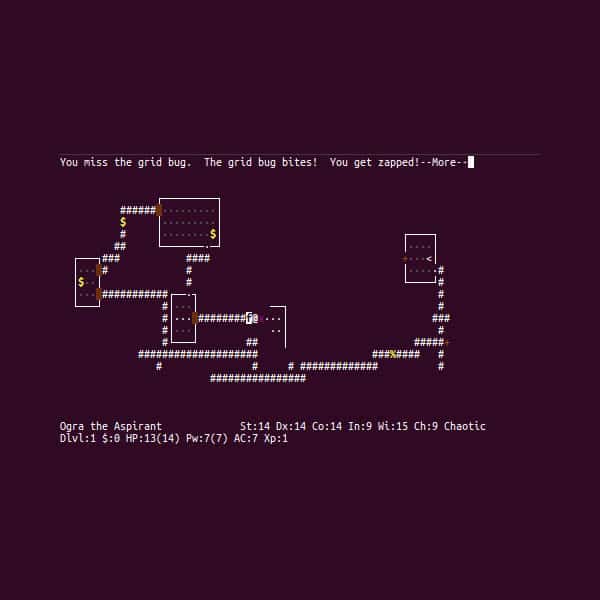

Dungeon crawler games were a great fit: they offer complex environments with many different, randomly chosen monsters and items, yet they can be played in simple, low-resolution form. Tim ended up choosing NetHack, an ASCII-rendered, single-player fantasy game first released in 1987. The overall objective is to retrieve an amulet kept in the lowest levels of the dungeon and deliver it to a deity on the celestial planes.

Image from NetHack Wikipedia

The game features about fifty dungeon levels and five elemental planes, and a winning game takes around 50,000 steps. On top of that, the outcomes of many actions are decided by simulated dice rolls, much like in Dungeons & Dragons. This makes outcomes uncertain in a way that more closely resembles the real world.
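The effect of dice rolls on an agent can be illustrated with a short sketch. The `roll` and `attack_hits` helpers below are hypothetical, and the to-hit rule is a deliberately simplified assumption, not NetHack's actual combat formula. The point is only that the same action, taken in the same situation, sometimes succeeds and sometimes fails, so an agent has to learn about probabilities rather than fixed outcomes.

```python
import random


def roll(num_dice: int, sides: int, rng: random.Random) -> int:
    """Roll num_dice dice with the given number of sides, e.g. roll(2, 6, rng) for 2d6."""
    return sum(rng.randint(1, sides) for _ in range(num_dice))


def attack_hits(to_hit: int, rng: random.Random) -> bool:
    """Simplified, hypothetical to-hit check: the attack lands if a d20 roll is <= to_hit.

    This is NOT NetHack's real formula; it only shows that an identical action
    can succeed or fail depending on the roll.
    """
    return roll(1, 20, rng) <= to_hit


rng = random.Random(0)
outcomes = [attack_hits(12, rng) for _ in range(10_000)]
hit_rate = sum(outcomes) / len(outcomes)
print(f"hit rate: {hit_rate:.3f} (expected about {12 / 20})")
```

Over many repetitions the empirical hit rate approaches 12/20, but any single attack is a coin flip the agent cannot control.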

Each game is generated from a seed that determines the initial dungeon, together with a random number generator that decides the outcomes of many other, more complex actions. When the player dies, the game is over and a new one starts from the beginning. In this way, every playthrough is unique, which helps agents learn behavior that generalizes instead of memorizing a single layout.

Tim initially thought this game would be too complex for a “vanilla” reinforcement learning model to learn. Early attempts made it through 5 or 6 levels, and the lucky ones worked their way up to the 10-15 level range. For context, it would take a human who was adept at video games about a week of regular playing to achieve that 10-15 level range. Tim himself played a simpler version of this game on his mobile phone on the train between Oxford and London for two years before finally finishing it.

Training Reinforcement Learning Agents: Reward functions in NetHack

While the game does generate a score, which combines dungeon levels reached and monsters killed, this wasn’t the best reward function for training an agent.

“If you get a reward for going down a dungeon level and killing stuff, you get an agent that just, completely berserk tries to kill everything in their path, and just tries to run down the dungeon level as quickly as possible without caring about [trying] to get stronger in the long run.”

Tim ended up rewarding exploratory behavior, such as exploring the dungeon, searching for items, and seeking out novelty, similar to what motivates many human players. Ultimately, the most effective goal for the agent was to expand its knowledge of the dynamics governing the dungeon.
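One common way to turn “reward novelty” into a number is a count-based exploration bonus, sketched below. This is a generic technique swapped in for illustration, not necessarily the reward Tim’s team used. `CountBasedNovelty`, `shaped_reward`, the `score_weight` knob, and the `(dungeon level, x, y)` state key are all hypothetical. A state visited for the first time earns the full bonus, and the bonus shrinks each time the agent returns to it, so the agent is pushed toward places it has not been.

```python
import math
from collections import Counter
from typing import Hashable


class CountBasedNovelty:
    """Generic count-based exploration bonus: beta / sqrt(N(s)).

    An assumed stand-in for "rewarding novelty", not the exact NetHack reward.
    """

    def __init__(self, beta: float = 1.0) -> None:
        self.beta = beta
        self.counts: Counter = Counter()  # visit count N(s) per state

    def reward(self, state: Hashable) -> float:
        self.counts[state] += 1
        return self.beta / math.sqrt(self.counts[state])


def shaped_reward(score_delta: float, state: Hashable,
                  novelty: CountBasedNovelty, score_weight: float = 0.0) -> float:
    """Mix the change in in-game score with the novelty bonus (hypothetical weighting).

    score_weight=0.0 rewards exploration only; a large value pushes the agent
    back toward chasing the in-game score.
    """
    return score_weight * score_delta + novelty.reward(state)


novelty = CountBasedNovelty()
# Hypothetical state key: (dungeon level, x, y) of the agent.
first_visit = shaped_reward(0.0, (1, 10, 5), novelty)   # full bonus
second_visit = shaped_reward(0.0, (1, 10, 5), novelty)  # smaller bonus on a revisit
new_square = shaped_reward(0.0, (1, 11, 5), novelty)    # full bonus again
```

Because the bonus decays rather than dropping to zero, revisiting a square is never forbidden, only less rewarding than finding somewhere new.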

With this reward function, Tim and his team saw agents learn to go deeper into the dungeon, find secret doors, avoid fighting difficult monsters, and even eat food to keep from starving.

MiniHack: A Simpler Sandbox Environment

While NetHack is a massive game, MiniHack is a simpler version of it that allows researchers to isolate more specific behaviors. This enables new research directions in areas like curriculum learning, which attempts to speed up training by teaching models a sequence of increasingly complex tasks.

In a curriculum, an agent masters easy levels before moving on to more challenging ones. To make learning as efficient as possible, agents are trained at their “frontier”, where they are working just at the limit of what they can do.
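A minimal sketch of training at the frontier, assuming the agent’s recent success rate on each level is already tracked. The level names, the 0.2 to 0.8 frontier band, and the helper functions are hypothetical choices for illustration. Levels the agent always solves teach it little, and levels it never solves give it little signal, so the sketch picks levels it solves only some of the time.

```python
from typing import Dict, List, Optional


def frontier_levels(success_rate: Dict[str, float],
                    low: float = 0.2, high: float = 0.8) -> List[str]:
    """Levels the agent solves sometimes but not always (thresholds are assumptions)."""
    return [level for level, p in success_rate.items() if low <= p <= high]


def next_level(success_rate: Dict[str, float],
               low: float = 0.2, high: float = 0.8) -> Optional[str]:
    """Pick the next training level from the frontier, with a fallback."""
    frontier = frontier_levels(success_rate, low, high)
    if frontier:
        # Prefer the level whose success rate is closest to the middle of the band.
        mid = (low + high) / 2
        return min(frontier, key=lambda level: abs(success_rate[level] - mid))
    # No frontier level: fall back to the unmastered level the agent is closest to solving.
    unmastered = [level for level, p in success_rate.items() if p < high]
    return max(unmastered, key=lambda level: success_rate[level]) if unmastered else None


# Hypothetical levels, ordered from easy to hard, with the agent's current success rates.
rates = {"corridor": 0.98, "small_maze": 0.65, "big_maze": 0.30, "boss_room": 0.0}
print(frontier_levels(rates), next_level(rates))
```

As the agent improves, its success rates rise, levels drop out of the band at the top, and harder levels enter it from below, so the curriculum advances on its own.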

One useful way to track an agent’s learning is with value error. Prior to exposing an agent to a new level, Tim and his team have the agent predict how well it thinks it will perform, and then compare the agent’s prediction to its actual performance.

Tim noted that the most useful levels were the ones with large value errors. For example, a level where an agent expected to perform poorly but performed well, or expected to perform well but played poorly, was more informative than one where the agent accurately predicted its performance.
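The prioritization described above can be sketched as follows. The idea resembles prioritized level replay, but this is a simplified illustration, not that method’s implementation: the helper names, the rank-based weighting, the `temperature` parameter, and the example returns are all assumptions. Each level is scored by the absolute gap between predicted and actual return, and levels with larger gaps are sampled more often.

```python
import random
from typing import Dict


def value_error(predicted_return: float, actual_return: float) -> float:
    """How wrong the agent was about a level: |predicted - actual|."""
    return abs(predicted_return - actual_return)


def level_probabilities(scores: Dict[str, float],
                        temperature: float = 1.0) -> Dict[str, float]:
    """Rank-based prioritization: the largest value error gets rank 1 and the highest weight."""
    ranked = sorted(scores, key=lambda level: scores[level], reverse=True)
    weights = {level: (1.0 / rank) ** (1.0 / temperature)
               for rank, level in enumerate(ranked, start=1)}
    total = sum(weights.values())
    return {level: w / total for level, w in weights.items()}


def sample_level(scores: Dict[str, float], rng: random.Random,
                 temperature: float = 1.0) -> str:
    """Draw the next training level, favoring levels with large value errors."""
    probs = level_probabilities(scores, temperature)
    levels = list(probs)
    return rng.choices(levels, weights=[probs[level] for level in levels], k=1)[0]


# Hypothetical levels: value error from (predicted return, actual return) on the last attempt.
scores = {
    "level_a": value_error(0.9, 0.1),   # expected to do well, did poorly
    "level_b": value_error(0.5, 0.45),  # prediction was nearly right
    "level_c": value_error(0.1, 0.8),   # expected to do poorly, did well
}
probs = level_probabilities(scores)
```

Using ranks rather than raw errors keeps one level with an unusually large error from crowding out everything else; a lower `temperature` concentrates sampling further on the top-ranked levels.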

Unsupervised Environment Design

Another approach Tim explored was unsupervised environment design. In this setup, there’s a student program and a teacher program. The teacher designs environments for the student that become progressively more difficult, so the student keeps learning, again following a “curriculum” progression.
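Here is a minimal sketch of the teacher side of this loop, assuming small grid mazes like the ones mentioned in the experiments. The `Teacher` class, its wall-density rule, and the 0.2 and 0.8 thresholds are hypothetical. Real unsupervised environment design methods typically learn the teacher rather than using a fixed rule like this one. The teacher only proposes mazes that are solvable (checked with breadth-first search) and makes them denser as the student’s success rate rises.

```python
import random
from collections import deque
from typing import List

Grid = List[str]


def solvable(grid: Grid) -> bool:
    """Breadth-first search from the top-left to the bottom-right through '.' cells."""
    n = len(grid)
    if grid[0][0] == "#" or grid[n - 1][n - 1] == "#":
        return False
    seen = {(0, 0)}
    queue = deque([(0, 0)])
    while queue:
        r, c = queue.popleft()
        if (r, c) == (n - 1, n - 1):
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < n and 0 <= nc < n and grid[nr][nc] == "." and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False


class Teacher:
    """Hypothetical teacher: proposes solvable mazes, harder as the student improves."""

    def __init__(self, size: int = 8, seed: int = 0) -> None:
        self.size = size
        self.wall_prob = 0.05  # starting difficulty (assumption)
        self.rng = random.Random(seed)

    def propose(self) -> Grid:
        while True:  # resample until there is a path from start to goal
            grid = ["".join("#" if self.rng.random() < self.wall_prob else "."
                            for _ in range(self.size)) for _ in range(self.size)]
            if solvable(grid):
                return grid

    def update(self, student_success_rate: float) -> None:
        """Raise difficulty when the student succeeds often, lower it when it struggles."""
        if student_success_rate > 0.8:
            self.wall_prob = min(0.4, self.wall_prob + 0.05)
        elif student_success_rate < 0.2:
            self.wall_prob = max(0.0, self.wall_prob - 0.05)


teacher = Teacher(seed=0)
maze = teacher.propose()
# A real student would train on `maze` here; its measured success rate drives the teacher.
teacher.update(student_success_rate=0.9)  # student is doing well -> denser mazes next
```

The solvability check matters: without it, a teacher rewarded for difficulty could simply propose impossible mazes, which teach the student nothing.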

In the two experiments Tim mentioned, agents were trained on mazes and on 2D car racing tracks, and their performance generalized reasonably well to new mazes and tracks, respectively.

The Future of Reinforcement Learning

Tim believes the future of reinforcement learning will incorporate more of this unsupervised environment design. He also hopes to see more complex, rich, and interesting worlds for models to train in, perhaps even Minecraft.

Tim hopes to learn more about creating agents that are intrinsically motivated to expand their knowledge of their environments through exploration.

“How do we make sure agents just get excited about expanding their knowledge about certain dynamics in the environment?”

Be sure to check out the NetHack Challenge, which Tim and his team will be running at NeurIPS this year.