A few weeks ago, I had the opportunity to attend Siemens’ Spotlight on Innovation event in Orlando, Florida. The event aimed to bring together industry leaders, technologists, local government leaders, and other innovators for a real-world look at how technologies like AI, cybersecurity, IoT, digital twins, and smart infrastructure are helping businesses and cities unlock their potential.

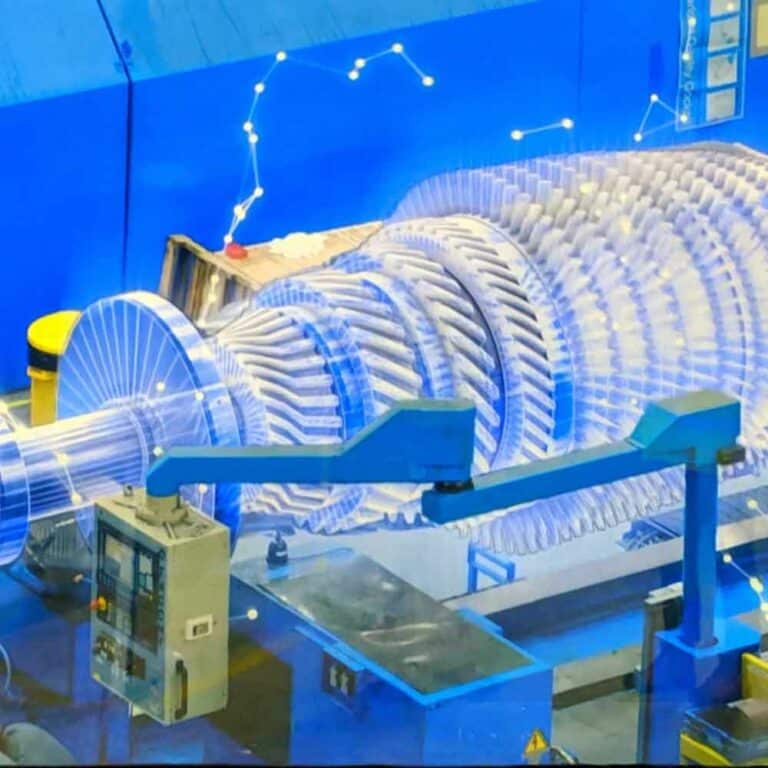

Siemens put together a nice pre-event program the day before the main conference, which included a tour of their Gamesa Wind Turbine Training Center. We got a peek into how these machines are assembled, repaired, and managed.

As expected, wind turbines are increasingly being fitted with sensors that, when coupled with machine learning algorithms, allow the company to optimize the turbines’ performance and perform predictive maintenance.

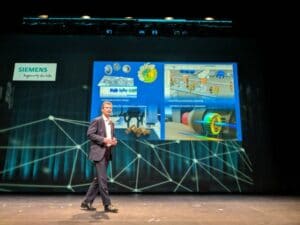

AI figured prominently in the discussions at the main conference, and the highlight for me was Norbert Gaus, head of R&D at Siemens, presenting an overview of the four main AI use cases the company is interested in:

- Generative product design

- Automated product planning

- Adaptable autonomous machines

- Real-time simulation and digital twin

He offered examples in each of these areas, though not in much detail. (My Industrial AI ebook is a good reference for more on the opportunities, challenges, and use cases in this space.)

Gaus also provided an interesting overview of the systems and software tools the company is building for internal and customer/partner use. These span AI-enabled hardware, industry-specific algorithms and services, AI development tools and workflows, pretrained AI models and software libraries, and industrial knowledge graphs.

I was able to capture a couple of really interesting conversations with Siemens applied AI research engineers about some of the things the company is up to.

Over on Twitter, you can check out a short video I made with Siemens engineer Ines Ugalde, in which she demonstrates a computer vision-powered robot arm she worked on. The arm uses the deep learning-based YOLO algorithm for object detection and the Dex-Net grasp quality prediction algorithm, designed in conjunction with Ken Goldberg’s AUTOLAB at UC Berkeley, with all inference running in real time on an Intel Movidius VPU.

I also had an opportunity to interview Batu Arisoy for Episode 281 of the podcast. Batu is a research manager with the Vision Technologies & Solutions team at Siemens Corporate Technology.

Batu’s research focuses on solving limited-data computer vision problems. We cover a lot of ground in our conversation, including an interesting use case in which simulation and synthetic data are used to recognize spare parts in place, when the part cannot be isolated.

“The first way we use simulation is actually to generate synthetic data and one great example use case that we have developed in the past is about spare part recognition. This is a problem if you have a mechanical object that you deploy in the field and you need to perform maintenance and service operations on this mechanical functional object over time.

In order to solve this problem, what we are working on is using simulation to synthetically generate a training data set for object recognition for a large number of entities. In other words, we synthetically generate images as if these images were collected in the real world by an expert and annotated by an expert, and this actually comes for free using the simulation.

[…] We deployed this for the maintenance applications of trains, and the main goal is: a service engineer goes to the field, he takes his tablet, he takes a picture, then he draws a rectangle box, and the system automatically identifies what the object of interest is that the service engineer would like to replace. In order to make the system reliable, we have to take into consideration different lighting conditions, texture, colors, or whatever these parts can look like in a real-world environment.”
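Batu’s key point is that when training images are rendered rather than photographed, the labels come for free: the simulator already knows where each part is. The toy sketch below illustrates that idea under loud assumptions. It is not Siemens’ pipeline; a real system would render CAD models of the parts with a 3D engine, whereas here a flat colored rectangle stands in for the part. Lighting, color, texture noise, and placement are randomized per image (a simple form of domain randomization, in the spirit of the varied conditions Batu mentions), and the bounding box is recorded at render time. All names (`render_sample`, `make_dataset`, the part labels) are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Sample:
    image: list   # height x width grid of (r, g, b) tuples
    bbox: tuple   # (x0, y0, x1, y1); x1 and y1 are exclusive
    label: str    # hypothetical part name


def render_sample(part_label, width=64, height=64, rng=None):
    """Render one toy synthetic image of a part plus its exact bounding box."""
    if rng is None:
        rng = random.Random()
    # Randomized scene parameters: global lighting, background and part colour.
    light = rng.uniform(0.4, 1.2)
    background = tuple(rng.randint(0, 255) for _ in range(3))
    part_color = tuple(rng.randint(0, 255) for _ in range(3))
    # Randomized part size and position; the box is known because we place it.
    w = rng.randint(width // 8, width // 2)
    h = rng.randint(height // 8, height // 2)
    x0 = rng.randint(0, width - w)
    y0 = rng.randint(0, height - h)

    def clamp(v):
        return max(0, min(255, v))

    image = []
    for y in range(height):
        row = []
        for x in range(width):
            inside = x0 <= x < x0 + w and y0 <= y < y0 + h
            base = part_color if inside else background
            noise = rng.randint(-10, 10)  # crude per-pixel stand-in for texture
            row.append(tuple(clamp(int(c * light) + noise) for c in base))
        image.append(row)
    # The annotation is a by-product of rendering: no human labeling needed.
    return Sample(image=image, bbox=(x0, y0, x0 + w, y0 + h), label=part_label)


def make_dataset(labels, n_per_label, seed=0):
    """Generate n_per_label annotated samples for each part label."""
    rng = random.Random(seed)
    return [render_sample(label, rng=rng) for label in labels for _ in range(n_per_label)]


if __name__ == "__main__":
    data = make_dataset(["brake_pad", "door_latch"], n_per_label=2, seed=42)
    for s in data:
        print(s.label, s.bbox)
```

Because each box comes from the same random draws that place the part, the annotations are exact by construction, and fixing the seed makes the generated data set reproducible.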

There’s a ton of great detail in this conversation. In particular, we dig into how this system works, including a couple of methods from his group’s recent CVPR papers: “Tell Me Where to Look,” which introduced the Guided Attention Inference Network, and “Learning Without Memorizing.”

Definitely check out the full interview!